The use of catastrophe models to price catastrophe excess of loss business has become so pervasive that many observers could be forgiven for believing that all one has to do is license the right models and press a few buttons to calculate the 'risk' price of any contract. But models are only models: simplifications or idealisations of reality. As was apparent after the storms of 2004 and 2005, inherent uncertainties and differences in the modelling process and data availability mean that results are not absolute, and that model outputs often vary substantially between vendors. In addition, outside the primary capital-driving markets, and for many perils, model coverage is incomplete.

Underwriters need to be able to make informed judgements about the various internal and vendor model views of risk. This requires an understanding of each model's limitations and unique properties, and of how other factors, such as the way in which insurance conditions translate into model numbers, can affect the result. As a result, reinsurance pricing is increasingly a team effort, with actuaries, modellers and underwriters working together to determine their best estimate of the correct price for a transaction.

What, then, are the critical elements that a catastrophe risk pricing team must work through when making the all-important link between model output (the modelled loss expectation for a portfolio) and price?

Underwriting using catastrophe models

An ideal procedure for dealing with model output can be outlined as follows.

1. Identify all possible perils that could affect the transaction.

2. Estimate a pure risk premium for each peril, whether there is a sophisticated catastrophe model for the peril or not.

3. For each peril, refine the risk premium estimate based on a deeper analysis, particularly where multiple modelled views and independent information sources are available.

a. Assess model credibility for the modelled perils using additional information from outside the models.

b. Identify aspects of the loss process that are not modelled and quantify their potential influence.

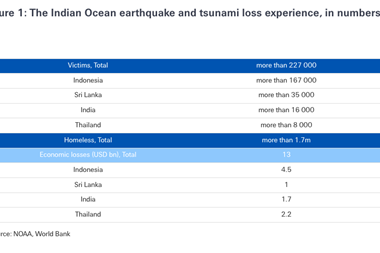
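Steps 1 and 2 can be sketched in Python as below, assuming (hypothetically) that each peril's view is held as a list of expected annual loss estimates, each with a credibility weight chosen by the pricing team. The class and function names, the weights and the figures are illustrative only and are not PartnerRe's method. Because expected losses add linearly, the per-peril pure premiums can simply be summed, whatever the dependence between perils; the hard part is making sure the peril list is complete.

```python
from dataclasses import dataclass, field


@dataclass
class PerilView:
    """All views of one peril: vendor models, internal models or judgement-based estimates."""
    name: str
    # Each estimate is (source, expected annual loss, credibility weight);
    # the weights are hypothetical and stand for the team's judgement.
    estimates: list = field(default_factory=list)

    def pure_premium(self) -> float:
        """Credibility-weighted average of the expected annual losses for this peril."""
        total_weight = sum(weight for _, _, weight in self.estimates)
        if total_weight <= 0:
            # Step 2: every peril needs some estimate, whether modelled or not
            raise ValueError(f"no estimate supplied for peril {self.name!r}")
        return sum(loss * weight for _, loss, weight in self.estimates) / total_weight


def total_pure_premium(perils: list) -> float:
    """Sum the per-peril pure premiums (step 1 requires the peril list to be complete)."""
    return sum(peril.pure_premium() for peril in perils)


# Hypothetical figures for a Japanese property programme
perils = [
    PerilView("earthquake", [("vendor A", 1.2e6, 0.5), ("vendor B", 1.8e6, 0.5)]),
    PerilView("tsunami", [("scenario judgement", 0.2e6, 1.0)]),
    PerilView("volcanic eruption", [("scenario judgement", 0.05e6, 1.0)]),
]
print(f"Total pure premium: {total_pure_premium(perils):,.0f}")
```

Step 3 then amounts to revisiting the weights and estimates for each peril as further model views and independent information become available.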

Perhaps this sounds relatively straightforward, but in reality we as an industry are still constantly surprised by losses where the associated peril was not modelled (9/11, SARS, the 2004 Asian tsunami and the Hurricane Katrina flood claims), or by events whose losses far exceed the expected modelled loss.

Under-estimation of loss potential can occur for a number of reasons, including:

Failure to include all possible perils

The underwriter should be careful not to ignore potential catastrophe perils that seem unlikely, or which would be too hard to model with the available tools. Good examples are volcanic eruption and tsunami in countries such as Japan and New Zealand.

Inherent model assumptions

The second cause of surprises is more insidious. Catastrophe models rely on a series of basic assumptions that simplify reality. As a result, processes that could increase insured losses may be ignored entirely or modelled in a way that potentially understates their impact. Underwriters need to familiarise themselves with these assumptions.

While it is not possible to give a comprehensive list of necessary modelling adjustments, the remainder of this paper identifies a set of key sources of adjustment, with practical examples for each.

Adjustment sources

We will first examine the model calculation of an insured loss. It is important to separately analyse the components of a catastrophe model, because there is potential for two models to produce superficially similar views of catastrophe risk through a fortuitous combination of different hazard and vulnerability assumptions.

The hazard component

Uncertainties in the hazard component can be driven by a wide range of factors, which vary between perils. Much of this uncertainty is epistemic, arising from incomplete knowledge rather than from randomness: in an ideal world, with enough data, it would be eliminated.

Our knowledge is, however, incomplete, and there are a number of basic questions that we must ask:

Do the catastrophe events that we are modelling occur uniformly over time, or are they becoming more or less frequent? Consequently, is the past a good enough basis on which to model the present and future?

The drivers behind increased Atlantic storm frequency and the magnitude of the changes are still being debated. However, weather perils may well be affected by climate change, so it is hard to be confident that catastrophe models built on historical data for the frequency and intensity of storms and floods accurately represent current conditions.
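The sensitivity of a return period to the frequency assumption can be illustrated with a short Python sketch. It assumes events arrive as a Poisson process, so the annual probability of at least one event is 1 - exp(-rate); the 1% historical rate and the 20% uplift are hypothetical numbers chosen only to show the effect, not estimates from any model.

```python
import math


def return_period(annual_rate: float) -> float:
    """Return period in years for a Poisson event rate: 1 / P(at least one event in a year)."""
    return 1.0 / -math.expm1(-annual_rate)


# Hypothetical: an event with a historical rate of 1% a year, and an assumed 20%
# uplift to reflect a possibly more active current climate regime.
historical_rate = 0.01
for uplift in (1.0, 1.2):
    print(f"frequency x{uplift}: {return_period(historical_rate * uplift):.1f} years")
```

Under these assumptions, what the historical record treats as a 100-year event becomes roughly an 84-year event, and the expected annual loss from such events rises by the full 20%.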

How will I mathematically represent the loss process to be modelled? What are the potential weaknesses of my chosen modelling methodology?

This point requires a more detailed explanation. Vendor models previously approached European windstorm in three distinct ways. One approach was to reconstruct events using ground-based wind measurements, and then to carefully adjust and move these events around to create a large event set. In comparison, a second vendor's methodology was to carefully analyse data from over 100 years of storm activity and to use it to populate a probabilistic model covering the directions, locations, intensities and other features of geometrically simplified storm events. A third vendor chose a physical model based on weather forecast technology, driven by a far more limited dataset of detailed atmospheric measurements.

These differences have some clear implications. The second methodology had clearly defined probabilities for each simulated event, but less realistic wind fields. In contrast, the first and third methodologies had realistic wind fields, but it was difficult to be sure that they had created the full range of possible storms and assigned them realistic probabilities.
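Why the second, parametric approach yields clearly defined event probabilities can be seen in a minimal Python sketch. It assumes each simplified storm is described by only a heading, a track position and a peak gust, each sampled from a distribution; every distribution and parameter below is hypothetical, and a real model would fit them to the historical record and derive a wind field from each parameter set. Because every simulated storm is an equally likely draw, each one carries an explicit annual rate. The trade-off is also visible: a storm reduced to three numbers cannot have a realistic wind field.

```python
import random
from dataclasses import dataclass


@dataclass
class SimulatedStorm:
    heading_deg: float      # direction of travel (90 = towards the east)
    track_offset_km: float  # cross-track position relative to a reference line
    max_gust_ms: float      # peak intensity
    annual_rate: float      # frequency attached to this single simulated event


def parametric_event_set(observed_storms: int, observed_years: int,
                         n_events: int, seed: int = 0) -> list:
    """Sample simplified storms from assumed parameter distributions.

    Each event is an equally likely draw, so the observed storm rate is shared
    evenly across the event set.
    """
    rng = random.Random(seed)
    rate_per_event = observed_storms / observed_years / n_events
    events = []
    for _ in range(n_events):
        events.append(SimulatedStorm(
            heading_deg=rng.gauss(70.0, 15.0),            # hypothetical: mostly east-north-east
            track_offset_km=rng.uniform(-800.0, 800.0),   # hypothetical domain width
            max_gust_ms=30.0 + rng.expovariate(1 / 6.0),  # hypothetical threshold plus tail
            annual_rate=rate_per_event,
        ))
    return events


storms = parametric_event_set(observed_storms=300, observed_years=100, n_events=10_000)
print(f"{len(storms)} events, total annual rate {sum(s.annual_rate for s in storms):.2f}")
```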

The technologies used for European windstorm models have advanced, but subtle differences remain as a result of the method each model uses to represent and simulate windstorms.

How precisely defined are the parameters that need to be specified for the mathematical model that I have formulated?

A further point, separate from but related to the choice of model, is parameter uncertainty. For example, in an internal study we analysed the effects of parameter uncertainty on the estimated return period of Iniki, a hurricane that hit Hawaii in September 1992, shortly after Hurricane Andrew hit Florida. We used historical hurricane data and a Bayesian approach to define the probability distribution of the parameters of the extreme value distributions used to describe hurricane intensity. With this approach it was possible to create thousands of 'potentially valid catastrophe models' and to use them to estimate the return period of the reference loss that we had calculated for a reconstructed Iniki event.

The result of the analysis was that parameter uncertainty in hurricane intensity alone produced a return period ranging widely from 46 to 227 years (95% confidence interval). Details of the modelling process may reduce this uncertainty, but in this study the main source of uncertainty was that only 50 years of data were used to estimate the probability of an event that most likely has a return period of around 100 years.
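The mechanics of turning parameter uncertainty into a return period interval can be sketched in Python. This is not the study's model: it assumes a simple peaks-over-threshold set-up in which storms above a threshold arrive as a Poisson process and their intensity excesses are exponential, with near-flat Gamma priors so that both posteriors are Gamma. Each posterior draw plays the role of one 'potentially valid catastrophe model'. The storm counts and intensities are hypothetical.

```python
import math
import random


def return_period_interval(n_events: int, years: float, sum_excess: float,
                           ref_excess: float, draws: int = 20_000,
                           prior: float = 0.001, seed: int = 1):
    """2.5%, 50% and 97.5% points of the return period of a reference intensity.

    Hypothetical set-up: storms above a threshold arrive at Poisson rate lam, and
    intensity excesses over the threshold are exponential with rate theta.
    """
    rng = random.Random(seed)
    periods = []
    for _ in range(draws):
        # One 'potentially valid model': a posterior draw of both parameters
        lam = rng.gammavariate(prior + n_events, 1.0 / (prior + years))
        theta = rng.gammavariate(prior + n_events, 1.0 / (prior + sum_excess))
        # Annual rate of storms at least as intense as the reference event
        ref_rate = lam * math.exp(-theta * ref_excess)
        periods.append(1.0 / -math.expm1(-ref_rate))
    periods.sort()

    def quantile(q):
        return periods[round(q * (draws - 1))]

    return quantile(0.025), quantile(0.5), quantile(0.975)


# Hypothetical record: 10 storms above threshold in 50 years, mean excess 10 units;
# the reference event exceeds the threshold by 30 units (roughly a 100-year event).
for years in (50, 200):
    scale = years / 50
    low, mid, high = return_period_interval(round(10 * scale), years, 100.0 * scale, 30.0)
    print(f"{years} years of data: median {mid:.0f}, 95% interval {low:.0f}-{high:.0f} years")
```

Quadrupling the (hypothetical) record length narrows the interval considerably, which mirrors the study's point that 50 years of data is short for estimating a roughly 100-year event.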

The vulnerability component

Vulnerability models are often cited as a major source of uncertainty. For example, differences in the classification of a building can easily change the modelled loss cost by 100%. A second, more insidious element is the incorporation of secondary modifiers into the modelling, which can bias results in either direction. For example, coding every house in a portfolio as having hurricane shutters will reduce the modelled loss, whether or not the shutters are actually fitted. The interaction between the vulnerability model and the input data is an element of modelling that model analysts need to study carefully and communicate clearly to underwriters.
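Both effects can be reproduced with a toy Python vulnerability calculation. The mean damage ratios by construction class and the shutter credit below are hypothetical values chosen for illustration, not figures from any vendor model. Coding timber risks as masonry halves the modelled loss (equivalently, the true loss is 100% higher than modelled), and coding every risk with shutters cuts it by 30%, regardless of what is actually on the buildings.

```python
from dataclasses import dataclass

# Hypothetical mean damage ratios at one wind speed, by construction class
MDR_BY_CLASS = {"masonry": 0.04, "timber": 0.08}
# Hypothetical secondary-modifier credit for hurricane shutters
SHUTTER_FACTOR = 0.7


@dataclass
class Risk:
    value: float
    construction: str
    shutters: bool


def modelled_loss(risks, force_class=None, force_shutters=None):
    """Event loss for a portfolio, optionally overriding the coded attributes."""
    total = 0.0
    for risk in risks:
        construction = force_class or risk.construction
        shutters = risk.shutters if force_shutters is None else force_shutters
        mdr = MDR_BY_CLASS[construction] * (SHUTTER_FACTOR if shutters else 1.0)
        total += risk.value * mdr
    return total


portfolio = [Risk(250_000, "timber", False) for _ in range(400)]
base = modelled_loss(portfolio)
print(f"as coded:          {base:,.0f}")
print(f"coded as masonry:  {modelled_loss(portfolio, force_class='masonry'):,.0f}")
print(f"all with shutters: {modelled_loss(portfolio, force_shutters=True):,.0f}")
```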

Data resolution effects

The quality of portfolio input data plays a critical role in determining the reliability of model output, as already noted with respect to the accurate classification of building and occupancy type. However, some further effects are also worth mentioning. Detailed data that includes the exact location and value of each risk in a portfolio allows models to match the local hazard accurately with the risks, and should produce a more reliable modelled result. Aggregate models, by contrast, are forced to make assumptions about both the geographical distribution and the size of individual risks, and each of these assumptions introduces uncertainty. As one example, the following study shows that risk size assumptions in aggregate models have a particularly large effect on the volatility of the cedant's retention when the cedant buys a gross reinsurance programme structure.

Retention volatility study

We analysed a real portfolio comprising risks of different sizes concentrated in a critical earthquake-exposed area, using PartnerRe's aggregate-level CatFocus earthquake model (as distinct from our detailed-level model) to simulate the impact on the portfolio of three selected earthquake events of different magnitude. The events were selected to represent loss return periods of 30, 65 and 150 years.

For each event, the aggregate model generated an estimate of the mean damage ratio (MDR) to the portfolio, together with its standard deviation (a standard measure of volatility). We then applied the results to three different, but widely purchased, reinsurance structures: a net structure, a gross structure with a working excess of loss that excludes natural perils, and a gross structure with a working excess of loss that includes natural perils.

The results showed that the reinsurance buyer gets far more certainty of protection at any chosen level under the net structure than under the gross structure. In the net case, there is a 10% chance that what should on average be a 65-year event turns out as bad as an average 90-year event. In the gross case, the uncertainty is higher: there is a 10% chance that the loss will be as high as an average 175-year event.

If the cedant purchases cover up to the average 65-year event, there is a 10% chance that, on the net structure, it is protected only up to a 55-year event. On the gross structure, however, there is a 10% chance that it is protected only up to a 20-year event.
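The study summarised each event by a mean damage ratio and its standard deviation. One common way to turn those two numbers into a '10% chance of being worse than' figure is to fit a beta distribution to the damage ratio by the method of moments and read off its 90th percentile. The Python sketch below does this by simulation; the choice of a beta distribution is an assumption here (the text does not say which distribution CatFocus uses), and the 5% mean and 3% standard deviation are hypothetical, not study figures. The wider the standard deviation, the further the 90th percentile sits above the mean, which is the effect the study expressed in return-period terms.

```python
import random


def beta_from_moments(mean: float, sd: float):
    """Method-of-moments beta parameters for a damage ratio with the given mean and sd."""
    var = sd ** 2
    if not 0 < mean < 1 or var >= mean * (1 - mean):
        raise ValueError("mean must lie in (0, 1) and variance below mean * (1 - mean)")
    common = mean * (1 - mean) / var - 1
    return mean * common, (1 - mean) * common


def damage_ratio_percentile(mean: float, sd: float, q: float,
                            draws: int = 100_000, seed: int = 7) -> float:
    """Monte Carlo percentile of the fitted beta distribution."""
    alpha, beta = beta_from_moments(mean, sd)
    rng = random.Random(seed)
    samples = sorted(rng.betavariate(alpha, beta) for _ in range(draws))
    return samples[round(q * (draws - 1))]


# Hypothetical event: MDR of 5% with a standard deviation of 3%
p90 = damage_ratio_percentile(0.05, 0.03, 0.90)
print(f"10% chance the damage ratio exceeds {p90:.1%}")
```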

Coverage and loss settlement detail

The example of European windstorm Erwin in January 2005 illustrates how the underlying coverage can leave exposure missing from a model. In Sweden, a large proportion of property policies included coverage for trees. This cover was triggered only once more than 50% of the trees on a given hectare of land had been blown down. As a result, there had been no significant recent historical losses, and the underwriting and modelling communities underestimated the risk. For some insurers, tree damage from Erwin accounted for as much as 60% of their loss burden.
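Why a trigger of this kind hides the exposure from the loss history can be shown with a tiny Python sketch. The settlement basis (insured value times the fraction blown down) and the blowdown fractions are hypothetical; only the 50% trigger comes from the text. Every ordinary storm below the trigger produces a zero claim, so the record suggests no exposure until an Erwin-like event crosses the threshold.

```python
def tree_claim(insured_value: float, blowdown_fraction: float,
               trigger: float = 0.5) -> float:
    """Hypothetical tree cover that pays only once more than `trigger` of a hectare is down."""
    return insured_value * blowdown_fraction if blowdown_fraction > trigger else 0.0


# Hypothetical blowdown fractions: a run of ordinary storms, then an Erwin-like event
history = [0.05, 0.12, 0.30, 0.08, 0.45]
print("historical claims:", [tree_claim(10_000, f) for f in history])
print("Erwin-like event:", tree_claim(10_000, 0.60))
```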

Other soft factors

These examples show a range of considerations, from relatively hard, model-related factors to softer coverage issues. There are many further soft factors to consider, including the client's buying strategy, its business model (whether direct, broker or agency based) and the reliability of its IT systems. Catastrophe risk underwriters assess these elements through client visits or even underwriting audits. Turning such factors into hard numbers is not easy, so in practice they tend to inform the decision on the minimum acceptable margin for a reinsurance transaction.

Final price

The final price includes loading for capital and expenses, and is selected to achieve a pre-agreed target return on equity. The calculation of the capital associated with a deal inevitably involves more catastrophe modelling, with the focus on calculation of the economic capital required to support the portfolio of catastrophe business being written. For a given transaction, a key determinant of the required capital is its correlation with the main capital-driving perils.
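A simplified version of the pricing step can be written as a short Python function. It assumes a one-period view with no investment income or tax and expenses expressed as a share of premium; under those assumptions the premium P that delivers a target return on equity r on allocated capital C, with expected loss EL and expense ratio e, solves (P - EL - eP) / C = r, giving P = (EL + rC) / (1 - e). The figures in the usage line are hypothetical, and the allocated capital would in practice come from the economic capital modelling described above.

```python
def technical_price(expected_loss: float, capital: float,
                    target_roe: float, expense_ratio: float) -> float:
    """Premium P solving (P - EL - expense_ratio * P) / capital = target_roe.

    Simplified one-period view: no investment income, no tax, expenses as a
    share of premium.
    """
    if not 0 <= expense_ratio < 1:
        raise ValueError("expense_ratio must be in [0, 1)")
    return (expected_loss + target_roe * capital) / (1 - expense_ratio)


# Hypothetical layer: expected loss 1.0m, allocated capital 10.0m, 15% target ROE, 10% expenses
print(f"{technical_price(1.0e6, 10.0e6, 0.15, 0.10):,.0f}")
```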

A problem at this stage is the varying reliability of catastrophe models across potential peak regions. There is currently an enormous focus on Atlantic hurricane, which is now arguably one of the best-modelled perils. The same focus has not yet developed for Japanese earthquake, European wind or flood. Away from the main capital-driving perils, modelling is even less well developed, yet model output for these perils tends to be used as though it were equally valid. As a result, too much diversification credit may be given in some non-peak markets, because both the expected loss costs and the required capital are poorly modelled.
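How diversification credit depends on the assumed dependence between perils can be illustrated with a variance-covariance aggregation in Python. This is an assumption for illustration only: economic capital for catastrophe portfolios is usually measured on simulated tail losses rather than by this square-root formula, and the stand-alone capital figures are hypothetical. The credit is largest when the non-peak peril is treated as uncorrelated with the peak peril and vanishes at full correlation, so understated correlations or loss costs in poorly modelled regions translate directly into excess credit.

```python
import math


def diversified_capital(capitals, corr):
    """Aggregate stand-alone capitals with a correlation matrix: sqrt(c^T R c)."""
    n = len(capitals)
    total = sum(capitals[i] * corr[i][j] * capitals[j]
                for i in range(n) for j in range(n))
    return math.sqrt(total)


# Hypothetical stand-alone capital: a peak peril and a non-peak peril
capitals = [100.0, 40.0]
for rho in (0.0, 0.5, 1.0):
    corr = [[1.0, rho], [rho, 1.0]]
    div = diversified_capital(capitals, corr)
    print(f"rho={rho}: capital {div:.1f}, diversification credit {sum(capitals) - div:.1f}")
```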

Conclusion

The above examples were chosen to indicate the sort of information that needs to be gathered and the skill set required within a catastrophe reinsurance underwriting unit. We have shown that a combination of detailed modelling understanding and insurance knowledge is essential to understand even this small subset of potential modelling issues.

We believe that catastrophe underwriting is done best when a team of specialists works together. The underwriters are responsible for understanding the policy coverages and insurance aspects and for giving feedback to modellers about potential issues. Modellers are responsible for understanding the quirks of individual models and, beyond that, for actively researching the potential effects of different model components and providing underwriters with quantitative feedback. Both sides are responsible for questioning each other and identifying areas where more work is needed.

- Rick Thomas is head of the catastrophe underwriting team for PartnerRe's global (non-US) operation.

Email: rick.thomas@partnerre.com

Website: www.partnerre.com