Daniel Roberts considers the limitations of quantitative risk management

Many years ago, in a land far away… is how all good stories should begin. And from those stories - sometimes we call them fairytales - we are supposed to take lessons.

My experience has taught me that quantification and modelling as a means of predicting potential outcomes can be a useful tool, or it can be horribly flawed. Models are only as good as the data and assumptions that go into them.

How many of us, for instance, included the possibility of a major geopolitical conflict in our Monte Carlo simulations for 2022?

Effective quantitative risk management relies heavily on the quality of the scenarios and assumptions. And this is perhaps best illustrated by a fairytale. So let us begin.

Once upon a time

Many years ago I was a Mainframe Systems Capacity Planner. This meant building complex models of mainframe systems based on performance data collected by the customer. The data would provide us with a picture of system utilisation at the CICS [customer information control system] partition and even at the major transaction level.

All subsystems would be measured, and we would build the model to simulate the resource usage.

The compute intensiveness of each application or subsystem would be simulated, and models run. The result was cross-checked against the actual system utilisation reports provided. Memory would be modelled, along with disk and network utilisation.

We built a model that would emulate the client’s mainframe environment down to the second decimal place of utilisation by element.

From that model, once it was agreed that it actually did represent the customer’s system, we would apply a number of scenarios to determine when the system would reach capacity, so that any upgrades could be budgeted for early enough, and pre-orders placed.

This was in the days when lead times for major system components (main memory, disk, and even CPU upgrades) could be three to six months. Six months of slow system response in an ATM network, for example, could result in customer queues and ultimately a loss of customers to rival banks.

And the upgrade components were not cheap. A major systems upgrade could be a board-level procurement request.

Normally, it would take two days to create and calibrate the model, and then we would spend three days running various scenarios. These could range from:

- Implementing a new application or system

- Increasing the number of offices

- New users for an application or system

- The potential acquisition of a competitor or other business (and integration onto existing platforms)

- Increased functionality in (and consequent load from) an application or system

- Merging systems onto fewer but more powerful platforms

Or combinations of the above. Usually, the scenarios were connected to a business and strategic plan. The business plan said that there would be ten new branches opened in the coming year. We would model the impact based on the average load of branches of the size of the new branches.
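The branch scenario above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not the tooling we used at the time: the function names, the planning ceiling and every figure below are hypothetical.

```python
import math

def average_branch_load(total_branch_util: float, branch_count: int) -> float:
    """Average utilisation (percentage points) contributed by one existing branch."""
    return total_branch_util / branch_count

def projected_utilisation(baseline: float, per_branch: float, new_branches: int) -> float:
    """Baseline utilisation plus the load assumed for the new branches."""
    return baseline + per_branch * new_branches

def months_to_ceiling(start_util: float, monthly_growth: float, ceiling: float):
    """Whole months until utilisation reaches the planning ceiling.

    Returns 0 if already at or above the ceiling, and None if there is no growth.
    """
    if start_util >= ceiling:
        return 0
    if monthly_growth <= 0:
        return None
    return math.ceil((ceiling - start_util) / monthly_growth)

if __name__ == "__main__":
    # Hypothetical inputs: 50 branches drive 40 points of a 60% baseline,
    # organic growth is 0.5 points a month, and 85% is the planning ceiling.
    per_branch = average_branch_load(40.0, 50)
    after_opening = projected_utilisation(60.0, per_branch, 10)
    print("Utilisation after ten new branches:", after_opening)
    print("Months to ceiling without new branches:", months_to_ceiling(60.0, 0.5, 85.0))
    print("Months to ceiling with new branches:", months_to_ceiling(after_opening, 0.5, 85.0))
```

The calculation itself is trivial. What matters is whether "ten branches of average size" is what the business plan actually delivers.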

A new application? How does that application compare to the existing application? Will it replace an application thus freeing up capacity? For packages, what were the load requirements or estimates provided by the vendor?

This was years ago. Less than a decade ago, a systems architect told me that the answer to all capacity problems is, “Just spool up another server”. That option was not available.

The role of modelling was to develop a quant-based assessment of potential futures so that strategic and operational planning could progress smoothly. Everything depended on the quality of the assumptions being made, and the realistic - or otherwise - nature of the scenarios or combinations of scenarios that were being assessed.

A spanner in the works

For one client, a large insurance company, we scheduled the capacity planning exercise some months in advance. We ensured the client gathered the appropriate systems performance data to feed into our initial model. Multiple trial runs were made to prepare and we were ready for the week.

On the first day, we were able to calibrate the model to represent the client’s mainframe environment to within a percentage point for all major applications and subsystems. And we were ready to calibrate major transactions and specific activities.

The second day was consumed by gaining exact calibrations of all applications and subsystems, and most key transactions (the system-heavy activities identified from the performance data) to one decimal place of accuracy.

By the middle of the third day, we had everything modelled to within two decimal places, and could replicate the results with multiple runs of the model.

Now we were ready to start running the scenarios. “Let’s see your main scenarios. We’ll start with a simple one,” I suggested.

To which the client responded, “Oh, I don’t have any right now. I’ll come up with some tonight, and we can run them tomorrow”.

Professionalism stopped me from blurting out something inappropriate.

Assumptions should be tested where possible. The client should have confirmed their strategic plan and operational requirements, along with their development plans and priorities for the coming year. All these should have been known well before we began.

It’s all in the assumptions

Let us apply this cautionary tale to any Monte Carlo simulation (or other modelling methodology) exercise. The number of iterations that the model runs is irrelevant if the input assumptions have not been carefully considered and validated.
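A minimal sketch of that point, in Python. The scenario is an assumption for illustration: annual load growth is drawn from a normal distribution, and we estimate the chance that it exceeds the available headroom. The function name, the distribution and all the figures are hypothetical.

```python
import random

def exceedance_probability(mean_growth: float, sd_growth: float,
                           headroom: float, iterations: int, seed: int = 0) -> float:
    """Monte Carlo estimate of P(growth > headroom) under the assumed distribution."""
    rng = random.Random(seed)  # fixed seed so that runs are repeatable
    hits = sum(1 for _ in range(iterations)
               if rng.gauss(mean_growth, sd_growth) > headroom)
    return hits / iterations

if __name__ == "__main__":
    headroom = 12.0  # percentage points of spare capacity (hypothetical)
    for iterations in (1_000, 100_000):
        # Same model, same iteration count; only the assumed mean growth differs.
        optimistic = exceedance_probability(5.0, 3.0, headroom, iterations)
        pessimistic = exceedance_probability(10.0, 3.0, headroom, iterations)
        print(iterations, "iterations:", optimistic, "vs", pessimistic)
```

More iterations make each estimate more stable, but they do nothing to close the gap between the two answers. That gap comes entirely from the assumed mean growth.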

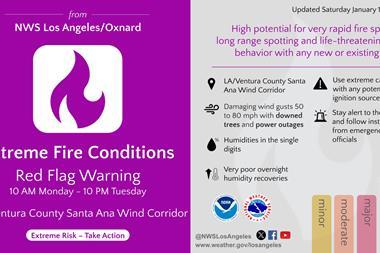

When modelling climate, if the starting assumptions do not include actual CO2 emissions - for instance - then the results may vary widely from predictions.

Likewise, if assumptions about the carrying capacity of the Amazon basin or the Siberian tundra are wrong, the modelled results will be wrong.

This is not to suggest that climate change is not real and the impact will not be extreme at current trajectories. But it does suggest that assumptions used need to be well-tested and continuously reassessed.

And climate scientists are doing exactly that - testing and confirming their input assumptions. They are certainly not going home and coming up with some scenarios overnight.

There are massive models, utilising many millions of times the computing power that I was using to model mainframes. And these supercomputers are looking at and analysing huge quantities of information and data.

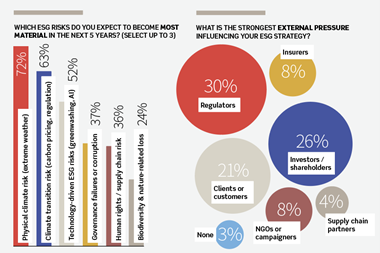

Insurance providers are able to provide quotes based on millions of (claims) events. Yet assumptions about future drivers of claims may be wrong.

Still, with millions of events used to calibrate models of future claims probabilities, in most cases unexpected future contributors to claims should not be so different as to completely invalidate the pricing models currently being used. However, the profitability of the overall insurance book might be impacted.

Historical loss experience does not always help us determine the future. During the height of the pandemic, personal behaviours changed dramatically - for instance - resulting in a direct impact on the validity of pre-pandemic modelling.

Models are just models

Coming back to the original point: quantification of low-number events or situations borders on the meaningless. When the assumptions and scenarios are not well thought through, a simulation of a million-plus interactions and events will not give future projections that provide any real confidence.

In the case of most risks, the quality of “quantification” comes from the quality of internal networks and the ability to consider the range of potential impacts that could lead to the realisation of the risk, or achievement of the benefits.

It also relies on the openness of individuals to discuss potential bottlenecks, and a culture that seeks clarification over attribution of blame.

Therefore, when deciding whether a risk can be quantified, it is worth first considering if quantification of that particular risk will have any real meaning, beyond a simplistic “high, medium, low”.

There is a place for quantitative modelling of complex systems, high volume activities, and potential portfolio performance. Usually. However, there are many situations in which the greatest forecasting value comes not from models, but from the combined knowledge and experience of individuals.

Qualification of risks, in assessment, probability considerations and mitigation strategies, can deliver better results than ‘following the numbers’.

I have also learned that if you control the assumptions, you can determine the results, regardless of the quantification tools and processes used.

Where quantification is desired, don’t forget to spend time and energy on the development and analysis of your assumptions.

Daniel Roberts is risk advisor and founder of GRMSi (Governance and Risk Management Services).
