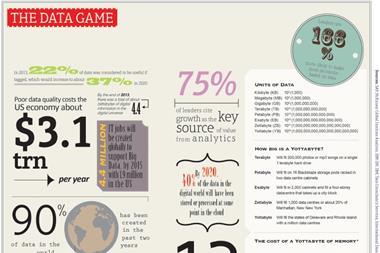

Panellists discuss the challenges in applying Big Data analytics to risk management

Big Data, and the value it offers to insurers, brokers and risk managers in the property and casualty markets, was the subject of a heated debate at yesterday's risk managers' panel.

The panel, hosted by Cathy Smith, comprised four risk managers from international organisations: Invensys vice-president, risk management and insurance, Chris McGloin; Tata Steel risk and insurance manager Annemarie Schouw; Stork and Fokker director of insurance Tjerk van Dijk; and Nestlé Group head of group risk services Andrew-Richard Bradley.

Bradley said that there is a need for Big Data, but that the ability to translate large quantities of data into usable risk analysis is lacking.

McGloin said he wanted to see insurers and risk managers using good-quality data: “Good quality risk information delivered to the right people in my company and at the right time is like gold dust.

“We pay a lot of money to [IT] providers to give us lots of information on things that are happening from a security perspective around the world – natural disasters – and that’s general information. If we could narrow it down to specific information around the supply chain then that becomes more valuable again.”

Schouw believes the opportunity for risk managers to make use of large quantities of data is there, but that the industry is not yet doing enough to put Big Data to work.

She said: “If we share more about claims and losses, then from the risk management and protective side we could do a lot more.”

Van Dijk agreed with his peers and pointed to the way the financial industry is already benefiting from Big Data: “If banks can have data online, as they do for many years, then why can’t insurers for property and casualty markets?”
