Forget sloth, gluttony and lust. These are the vices all risk managers must work hard to avoid

If risk management were a clinical, mathematical process similar to working out the mechanical stresses on a bridge, for example, we would all be a lot happier. Unfortunately, it also involves that squishy, unreliable construct - the human being. Forgetting this can lead the risk manager to overlook the deadly sins.

1. Complacency

When things are going well, organisations, like human beings, are tempted to relax and assume that tomorrow will be the same as today. The focus is on perfecting past success, not on innovation. This comfortable delusion can be rapidly disturbed, perhaps by an unforeseen event, but more probably by the increasing rapidity of technological change. Kodak, one of whose employees actually invented digital photography, is the latest in a long line of seemingly invincible companies that failed to move with the market. Founded in 1892, it filed for bankruptcy protection in 2012.

2. Believing the numbers

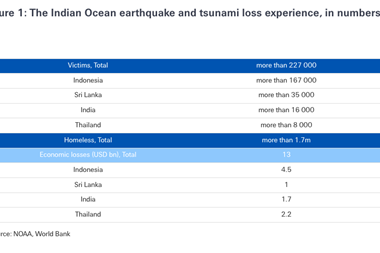

Look no further than the credit crunch for examples of placing too much belief in data. Models have their place, but data can be wrong and assumptions can turn out to be false. A ‘thousand-year event’ could happen tomorrow. The Fukushima nuclear disaster is an example of this. Anti-tsunami barriers were 5.7 metres high; the height of the wave exceeded 10 metres.

3. Attempting clairvoyance

It is tempting to rely on past experience to predict the future, especially if there are datasets going back decades. But this habit can be fatal, especially if it infects decision-making at a strategic level. Many retail companies have been mortally wounded by assuming that shopping patterns were fixed and ignoring the exponential growth in online buying.

4. Intelligence failure

Organisations that do not listen carefully enough to their customers or their workforce are vulnerable. Presentations or reports that aggregate opinions are seldom a substitute for hearing directly from individual customers or staff. It is the best, perhaps the only, way of spotting problems before they develop into something worse. The notorious failure of call centres to live up to customer expectations took a long time to remedy, partly because the cost savings were so attractive that the voice of the customer was ignored. Result: badly damaged reputations for the firms that did nothing.

5. Credulity

It’s an age-old delusion that because something is expensive, it must be good. The opposite is all too often true. This is particularly so with IT projects - many organisations remain locked into IT systems that have never really worked well, but are too expensive to dump. Probably the best example ever is the UK’s ‘Connecting for Health’ - which was to take the NHS into the electronic age. Begun in 2002, it has consumed £12.4bn (€14.9bn), and is still not dead. In August 2011, a damning parliamentary report noted the failure: “The department has been unable to deliver its original aim of a fully integrated care records system across the NHS.”

6. Cowardice

This is more common than bravery, despite the fact that bravery brings benefits. Cowardice is seen when things go wrong - first denial of responsibility, then grudging admission, then hiding behind lawyers, and finally niggardliness about putting things right. This sin is visible in a list of disasters and mistakes too long for comfort, with the 1989 wreck of the Exxon Valdez at the top. Growing evidence that chief executives are recognising the need to own up and communicate properly is welcome, but somewhat tarnished by the fact that organisational cowardice on a smaller scale - usually when dealing with whistleblowers - still infects many organisations.

7. Obfuscation

Obfuscation is the direct opposite of clear communication. It is a sin, and a worse sin still if it is done deliberately. On a daily level it runs through most organisations like a disease, with jargon, metaphors and cliches that mean little or nothing being used to give the impression of endeavour and achievement. Obfuscation is not confined to language - it can appear in numbers, charts and graphs. The risk is that things that should be clear are subject to misunderstanding, and that false impressions are given that things are better than they are. When the habit of wrapping communication in ambiguity spreads outside the organisation to the media interview or the annual report, the risk is heightened: stakeholders are not reassured by jargon.

Andrew Leslie is European editor of StrategicRISK
